By Steven Schultz

Felix Heide’s goal was big: help computers see.

He did not know he was starting on a path to develop artificial intelligence that uses light instead of electricity, requires vastly less energy than a conventional computer, and produces results hundreds of times faster.

“The question for me was always how can we use algorithms to sense and understand the world?” said Heide, an assistant professor of computer science at Princeton University.

At first, Heide, who joined the faculty in 2020, focused on using machine learning to more effectively draw information from light captured by cameras. He succeeded in creating cameras that use visible light or radar signals to detect objects around blind corners and see through fog — important goals for aiding drivers or ensuring the safety of autonomous vehicles.

Soon, however, Heide realized that making more progress would require rethinking what a camera is, what a lens is.

With his first graduate student, Ethan Tseng, Heide began to explore metasurfaces: materials whose geometry gives them special optical properties. Instead of bending light inside a piece of glass or plastic as a conventional lens does, a metasurface diffracts light around tiny structures, much as light spreads out after passing through a narrow slit. To build metasurfaces, Heide and his team struck up a collaboration with the lab of Arka Majumdar at the University of Washington, whose members are experts in ultra-small devices that control the interaction of light and matter.

“This is a completely new way of thinking about optics, which is very different from traditional optics,” Majumdar said in a news story published by the University of Washington.

In their first breakthrough, the combined research team built a high-resolution camera smaller than a large grain of salt. First reported in 2021 and now widely cited in scientific journals and by news media, their tiny camera depended on the precise positioning of millions of tiny pillars on a metasurface. The team used machine learning to arrange the pillars, each about a thousandth of a millimeter tall and much narrower, to make the maximum use of the light hitting the device.

Like a conventional lens, this surface focused the light onto a sensor, but it extracted a lot of information from a very small amount of light. The team wrote software that reconstructed this compressed information into surprisingly crisp color pictures.

Surpassing an AI benchmark

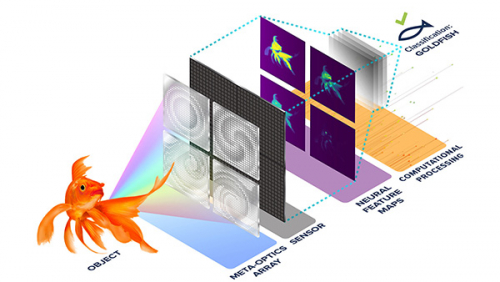

Then the researchers realized something profound. The light coming out of these complex arrays of pillars did not need to resemble, to a human eye, the object being imaged. The pillars could function as highly specialized filters that sorted the optical information into categories, such as edges, light areas, dark areas, or even qualities that a human viewer might not perceive or make sense of but that could be useful to a computer receiving this pre-processed information.

Suddenly their lens was not just benefiting from artificial intelligence; it was performing functions much like those at the heart of the most sophisticated AI systems that recognize images. Could the lens itself tell the difference between a dog and a horse?

“We realized we don’t need to record a perfect image,” Heide said. “We can record only certain features that we can then aggregate to perform tasks such as classification.”

The team pressed ahead with this idea and by the end of 2024, they succeeded in creating a system that could identify an object in an image using less than 1% of the computing needed by conventional techniques. Their metasurface lens — actually an array of metasurface lenses — had done 99.4% of the work.

In doing so, the team surpassed a key benchmark. Computer vision burst into practical use around 2012, when an artificial intelligence system called AlexNet achieved an unprecedented level of accuracy in identifying images. Heide’s system has now outperformed AlexNet when tested against industry-standard image databases, such as the ImageNet library developed at Princeton.

AlexNet “started this whole deep neural network revolution that we have seen play out over the last decade,” said Heide. Now, he said, optical systems appear poised to start a new revolution in ultra-fast, low-energy artificial intelligence.

“This could potentially extend beyond image processing, and this is where we are just touching the tip of the iceberg,” Heide said.

Francesco Monticone, an associate professor of electrical and computer engineering at Cornell University, said the work by Heide and Majumdar “elegantly and convincingly demonstrates the promise of optical metasurfaces” for low-power, low-latency computing systems. Monticone said that the ability of metasurfaces to perform many operations in parallel and to be finely manipulated across many variables could offer advantages over electronic processors and that Heide and Majumdar’s findings “represent a major step in the quest for ‘optical advantage’ in computing.”

Building on a Princeton legacy

The artificial intelligence that Heide’s lens achieved is rooted in the work of Princeton physicist and neuroscientist John Hopfield, who won a Nobel Prize last year for foundational work on artificial neural networks. Hopfield was interested in how the brain learns and remembers. In 1982, he proposed a computational model that represents neurons mathematically and captures learning and memory by adjusting the strength of connections between the virtual neurons.
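To make the idea concrete, here is a minimal textbook-style sketch of a Hopfield network in Python with NumPy. It assumes binary neurons that take the values +1 or −1 and the Hebbian storage rule commonly associated with the 1982 model; the function names `train_hopfield` and `recall` are illustrative and do not come from any published code. Memories live entirely in the connection strengths, and recall repeatedly nudges each virtual neuron toward the sign of its weighted input.

```python
import numpy as np


def train_hopfield(patterns: np.ndarray) -> np.ndarray:
    """Store +1/-1 patterns (one per row) with the Hebbian rule.

    Connections between neurons that are active together are strengthened;
    the result is a symmetric weight matrix with no self-connections.
    """
    n_neurons = patterns.shape[1]
    weights = patterns.T @ patterns / n_neurons
    np.fill_diagonal(weights, 0.0)  # a neuron does not connect to itself
    return weights


def recall(weights: np.ndarray, state: np.ndarray, max_sweeps: int = 10) -> np.ndarray:
    """Update neurons one at a time until the state stops changing."""
    state = state.copy()
    for _ in range(max_sweeps):
        previous = state.copy()
        for i in range(len(state)):
            # Each neuron takes the sign of the weighted sum of the others.
            state[i] = 1 if weights[i] @ state >= 0 else -1
        if np.array_equal(state, previous):
            break  # settled into a stored (or spurious) memory
    return state
```

Storing a few well-separated patterns and then passing `recall` a copy of one with a flipped entry should return the stored original, which is the associative-memory behavior Hopfield described.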

Soon after, researchers around the world began trying to build Hopfield networks using light signals. The prospect of computing with light is tantalizing: fast as electronic signals are, light is faster. Nothing travels faster than light.

As a practical matter, however, it was electrical, computer-chip-based systems that eventually took off. The systems that drive today’s artificial intelligence require trillions upon trillions of calculations to adjust the virtual connections in networks descended from Hopfield’s, consuming enormous amounts of computing power and energy.

Heide’s system offers a different paradigm. His optical system can perform the equivalent of hundreds of millions of computer calculations (called floating-point operations, or FLOPs) in an instant. Like Heide’s diffractive “filters,” computer-based neural networks apply mathematical filters (known as kernels) to their input data to extract specific pieces of information. Because processing even a few pixels of a picture requires a considerable number of calculations, modern AI systems look for very specific features in a small number of pixels at a time. In Heide’s system, large and complex filtering operations happen naturally as light passes through, which allows a few large, complex filters, or kernels, to analyze the whole image at once. In each metasurface lens within the array, the tiny pillars reorganize and abstract the light without any electrical input or active control.
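The contrast between many small kernels and a few large ones can be sketched in a few lines of Python with NumPy. This is a generic illustration, not the team’s method: it assumes an idealized linear, shift-invariant system, in which filtering an image with a kernel is a 2D convolution (circular here, for simplicity). Computed directly, a convolution’s cost grows with the kernel’s area, so a kernel as large as the image is very expensive. The FFT route is how an electronic processor would usually handle large kernels, at a cost on the order of H·W·log(H·W) operations per kernel. All function names are hypothetical.

```python
import numpy as np


def direct_conv_macs(height: int, width: int, k: int) -> int:
    """Multiply-accumulates for a same-size 2D convolution with one k x k kernel."""
    return height * width * k * k


def direct_circular_convolve(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Reference convolution with explicit loops; work grows with kernel area."""
    h, w = image.shape
    kh, kw = kernel.shape
    out = np.zeros((h, w))
    for y in range(h):
        for x in range(w):
            for dy in range(kh):
                for dx in range(kw):
                    # Kernel is centred on (y, x); indices wrap around the edges.
                    out[y, x] += kernel[dy, dx] * image[(y - dy + kh // 2) % h,
                                                        (x - dx + kw // 2) % w]
    return out


def fft_convolve_same(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """The same circular convolution computed as a product in the Fourier domain."""
    kh, kw = kernel.shape
    padded = np.zeros_like(image, dtype=float)
    padded[:kh, :kw] = kernel
    # Shift so the kernel centre sits at index (0, 0), matching the loop version.
    padded = np.roll(padded, (-(kh // 2), -(kw // 2)), axis=(0, 1))
    return np.real(np.fft.ifft2(np.fft.fft2(image) * np.fft.fft2(padded)))
```

For a 224 × 224 image, `direct_conv_macs` gives 451,584 multiply-accumulates for a 3 × 3 kernel but about 2.5 billion for a 224 × 224 kernel. That gap is one reason electronic networks favor small kernels, while an optical element can apply a large one simply as light passes through it.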

Heide and Tseng said this concept of a few large optical “kernels” as opposed to many small ones is key to their success. Another key is the integration of hardware and software.

In a sense, Tseng said, what the team is doing is like the evolution of animal vision.

“Animal vision is joint between optical hardware and neural back-end processing,” Tseng said, citing examples of the mantis shrimp, dragonfly, or cuttlefish that can sense a property of light waves called polarization, which conventional cameras do not record. “There are animals that have more exotic vision than what we have, and we suspect that the hardware of their eyes is working together with their brain to perform various tasks.”

For Heide, the freedom to follow his curiosity and stray from pure computer science into the different field of optics is unique to universities, and so was his path from the specific quest to improve cameras to the threshold of a broad new computing paradigm.

“Often I get the question: Working in AI, how do you compete with industry?” Heide said. “This is a perfect example of something that would have never happened in industry because it’s at the intersection of disciplines. It’s so disparate that we wouldn’t have found it without that freedom.”