By Julia Schwarz

Working at the intersection of machine learning, 3D printing and fine art, researchers at Princeton have found a simple new way to fabricate surfaces that show different images depending on your viewing angle.

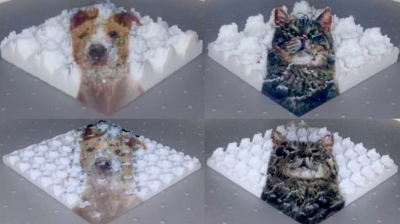

The images are not drawn on the object — the way the object’s surface is constructed creates the effects. The object is made up of many tiny bars, each less than a centimeter wide and printed at different heights and in different colors. “Like a complex 3D bar chart,” said Maxine Perroni-Scharf, former Princeton CS graduate student and first author on a new paper about the fabrication process.

All these tiny bars, when viewed from a certain direction, display a composite image. That image changes as the viewing angle changes. From one angle, you might see a cat. Rotate the object and you might see a dog. Rotate it further and there’s a bird. The researchers fabricated objects that included up to five unique images, viewable from five different angles.
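To make the idea of a view-dependent surface concrete, here is a minimal toy sketch in Python. It is not the researchers' algorithm. It assumes the effect comes from taller bars hiding shorter neighbors when the object is viewed at an oblique angle, which the article does not spell out. The function name `visible_tops`, the single row of bars, and the distant-viewer model are all hypothetical simplifications.

```python
import math


def visible_tops(heights, elevation_deg, from_left=True, bar_width=1.0):
    """Return indices of bars whose top centers a distant viewer can see.

    Toy 1D model (an assumption, not the paper's method): the viewer is far
    away, looking at the row from `elevation_deg` above the horizontal,
    from either the left or the right side.
    """
    slope = math.tan(math.radians(elevation_deg))
    n = len(heights)
    visible = []
    for i in range(n):
        # Bars standing between bar i and the viewer.
        blockers = range(i) if from_left else range(i + 1, n)
        occluded = False
        for j in blockers:
            # Horizontal gap from the center of bar i to the near edge of bar j.
            gap = (abs(i - j) - 0.5) * bar_width
            # The sight line rises by `slope` per unit of horizontal distance,
            # so bar j blocks it only if bar j is taller than the line there.
            if heights[j] > heights[i] + gap * slope:
                occluded = True
                break
        if not occluded:
            visible.append(i)
    return visible


# Hypothetical example: four bars with different heights and top colors.
heights = [2.0, 1.0, 3.0, 1.0]
colors = ["red", "blue", "green", "yellow"]

left_view = [colors[i] for i in visible_tops(heights, 30, from_left=True)]
right_view = [colors[i] for i in visible_tops(heights, 30, from_left=False)]
print(left_view)   # colors seen from the left at a 30-degree elevation
print(right_view)  # colors seen from the right at the same elevation
```

In this toy model the same four bars show a different set of colors from each side, and all four from a steep, near-overhead angle. A real fabrication pipeline would have to choose heights and colors for a full 2D grid so that each view forms a target image, which is the computational challenge the researchers describe.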

Fabricating such a complex set of surfaces is a computational challenge. Using insights from machine learning, the researchers solved this challenge by designing an algorithm that translates digital images into code that the 3D printer can use for fabrication.

According to Perroni-Scharf, who completed her master’s degree in May, this new approach is notable for its automation and simplicity. Other methods used to fabricate objects with multiple view-dependent images require several additional steps and more advanced hardware, she said. The new approach also yields images with a higher resolution than those produced by many other methods.

The research was completed as Perroni-Scharf’s master’s thesis in collaboration with her advisor, Szymon Rusinkiewicz, David M. Siegel ’83 Professor and chair of the department. “Maxine’s work is a great example of cutting-edge research performed by a student for an MSE thesis and published at the very top conference in her subfield,” said Rusinkiewicz.

The research was initially spurred by Perroni-Scharf’s background in fine art — one of her goals was to “create a really cool looking object.” But it also fit with her broader interest in exploring the intersections between machine learning and the tangible world. She plans to pursue this interest further at the Massachusetts Institute of Technology, where she will join the doctoral program in computer science in the fall.

Initially, she wasn’t sure she wanted to do a Ph.D., she said. But the MSE program at Princeton, which includes a track specifically for research, gave her “a great opportunity to figure that out.” Getting to be part of a lab and do graduate-level work confirmed her interest in going on to complete a doctoral degree.

The department’s Master of Science in Engineering program is unique, Rusinkiewicz added, because “it’s one of the very few opportunities in the country for students from every background to both take courses and perform world-class research in a fully-supported program.”

The paper, “Constructing Printable Surfaces with View-Dependent Appearance,” will be presented at the SIGGRAPH conference in Los Angeles, CA, on Wednesday, August 9. The research was supported by the Princeton Robotics Lab and the Princeton School of Engineering and Applied Sciences.