COS 429 - Computer Vision

Fall 2019

Assignment 2: Face Detection and Model Fitting

Due Thursday, Oct. 17

Part II. Training a Face Classifier

Dalal-Triggs:

The 2005 paper by Dalal and Triggs

proposes to perform pedestrian detection by training a classifier on

a Histogram of Oriented Gradients (HoG) descriptor, then applying that classifier throughout

an image. Your next task is to train that classifier, and test how well

it works.

Training:

At training time, you will need two sets of images: ones containing faces

and ones containing nonfaces. The set of faces provided to you comes from

the

Caltech 10,000 Web Faces dataset. The dataset has been trimmed to 6,000

faces, by eliminating images that are not large or frontal enough. Then,

each image is cropped to just the face, and is resized to a uniform 36x36

pixels. All of these images are grayscale.

The non-face images come from

Wu et al. and the

SUN scene database.

You'll need to do a bit more work to use these, though, since they come in

a variety of sizes and include entire scenes. So, you will need to

randomly sample patches from these images, and resize each patch to be

the same 36x36 pixels as the face dataset.
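
The patch-sampling step described above can be sketched roughly as follows. This is a minimal illustration, not the starter code: the function name `sample_random_patch` is hypothetical, the image is assumed to have already been loaded and converted to a 2-D grayscale array, and nearest-neighbor subsampling stands in for whatever resize routine you actually use.

```python
import numpy as np

def sample_random_patch(image, rng, out_size=36):
    """Crop a random square (side >= out_size) from a 2-D grayscale array
    and shrink it to out_size x out_size by nearest-neighbor sampling."""
    height, width = image.shape
    max_side = min(height, width)
    if max_side < out_size:
        raise ValueError("image is smaller than the output patch size")
    # Random side length, then a random top-left corner that keeps the
    # square fully inside the image (upper bounds are exclusive).
    side = int(rng.integers(out_size, max_side + 1))
    top = int(rng.integers(0, height - side + 1))
    left = int(rng.integers(0, width - side + 1))
    crop = image[top:top + side, left:left + side]
    # Nearest-neighbor resize: pick out_size evenly spaced rows and columns.
    idx = np.arange(out_size) * side // out_size
    return crop[np.ix_(idx, idx)]

rng = np.random.default_rng(0)
scene = rng.random((120, 200))  # stand-in for a grayscale nonface image
patch = sample_random_patch(scene, rng)
print(patch.shape)
```

Drawing the side length before the position keeps every sampled square inside the image, whatever its aspect ratio.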

Once you have your positive and negative examples (faces and nonfaces,

respectively), you'll compute the HoG descriptor (a partial implementation of

which is provided for you) on each one. Finally, you'll train the logistic

regression classifier you wrote in Part I to run on the feature vectors.

As mentioned in the

Dalal and Triggs paper and

in class,

the HoG descriptor has a number of parameters that affect its performance.

Two of these are exposed as inputs to the hog36 function, but you must implement the code that uses them. These parameters are the number of orientation bins in each histogram (orientations),

and whether those orientations cover the full 360 degrees or whether

orientations 180 degrees apart are collapsed together (wrap180). Implement the code needed to use both parameters, and then experiment

with these parameters to see whether the conclusions reached in the paper

(i.e., performance does not improve beyond about 9 orientations, and it

doesn't matter whether or not you wrap at 180 degrees) hold equally well

for face detection as for pedestrian detection.
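
To make the two parameters concrete, here is a small sketch of how a gradient direction might be mapped to an orientation bin. It is an assumption-laden illustration rather than the hog36 implementation: the function name `orientation_bins` is hypothetical, each pixel is hard-assigned to a single bin, and there is no magnitude weighting or interpolation between bins.

```python
import numpy as np

def orientation_bins(gx, gy, orientations=9, wrap180=True):
    """Map per-pixel gradients (gx, gy) to integer orientation-bin indices."""
    angle = np.arctan2(gy, gx)                  # in [-pi, pi]
    # With wrapping, directions 180 degrees apart share a bin, so the
    # angle is reduced modulo pi instead of modulo 2*pi.
    period = np.pi if wrap180 else 2 * np.pi
    angle = np.mod(angle, period)
    bins = np.floor(angle / period * orientations).astype(int)
    # Guard against floating-point rounding pushing an index past the end.
    return np.minimum(bins, orientations - 1)

# Two gradients pointing in exactly opposite directions.
gx = np.array([1.0, -1.0])
gy = np.array([0.0, 0.0])
wrapped = orientation_bins(gx, gy, orientations=9, wrap180=True)
unwrapped = orientation_bins(gx, gy, orientations=9, wrap180=False)
print(wrapped, unwrapped)
```

With wrapping, the two opposite gradients land in the same bin; without it, they land in different bins, which is the distinction the experiment is meant to probe.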

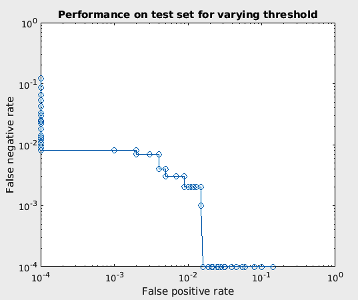

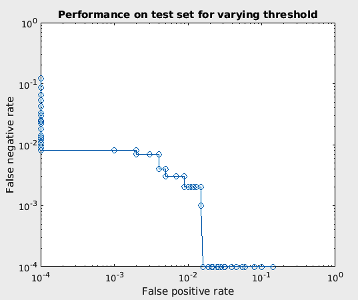

Predicting:

One of the nice things about logistic regression is that its output ranges

from 0 to 1, and is naturally interpreted as a probability. (In fact,

the logistic form of the posterior is exactly what results if the two classes are

distributed according to Gaussian models with different means but the same

variance.) In lecture, we thresholded the output of the learned model to

get a 0/1 prediction for the class, which effectively thresholded at

a probability of 0.5. But for face detection you may wish to bias the

detector to give fewer false positives (i.e., be less likely to mark

non-faces as faces) or fewer false negatives (i.e., be less likely to mark

faces as non-faces). Therefore, you will look at graphs that plot the

false-negative rate vs. false-positive rate as the threshold of

probability is changed. Curves that lie closer to the bottom-left corner

indicate better performance. Of course, you will look at performance on both

the training set (for which you expect great performance) and a separate

test set (which may or may not perform as well).
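
The trade-off curve described above can be computed along these lines. This is a sketch under stated assumptions, not the code in test_face_classifier.py: the function name `error_rates` is hypothetical, labels are assumed to be 1 for faces and 0 for nonfaces, and a datapoint is predicted to be a face when its probability is at least the threshold.

```python
import numpy as np

def error_rates(probs, labels, thresholds):
    """Return (false-positive rate, false-negative rate) per threshold."""
    probs = np.asarray(probs, dtype=float)
    labels = np.asarray(labels, dtype=bool)
    fpr, fnr = [], []
    for t in thresholds:
        predicted_face = probs >= t
        # False positives: nonfaces that were predicted to be faces.
        fpr.append(np.mean(predicted_face[~labels]))
        # False negatives: faces that were predicted to be nonfaces.
        fnr.append(np.mean(~predicted_face[labels]))
    return np.array(fpr), np.array(fnr)

probs = [0.9, 0.8, 0.4, 0.6, 0.3, 0.1]   # made-up classifier outputs
labels = [1, 1, 1, 0, 0, 0]              # first three are faces
fpr, fnr = error_rates(probs, labels, thresholds=[0.2, 0.5, 0.7])
print(fpr, fnr)
```

Raising the threshold never increases the false-positive rate and never decreases the false-negative rate, which is why sweeping it traces out a curve between the two extremes.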

Do this:

- Install SciPy. With the environment activated, run the following command:

  conda install -c anaconda scipy

- Download the starter code and dataset

(about 30 MB) for this part. It contains the following files:

- logistic_prob.py - you will modify this to compute the

predicted probability that new datapoints belong to class 1 (vs 0),

given a model trained by logistic_fit.

- get_training_data.py - you will modify this to create a matrix

of training data, including both positive and negative examples (i.e., faces

and nonfaces).

- get_testing_data.py - in a similar way, you will implement this

function to create a matrix of testing data.

- test_face_classifier.py - this function calls the above two

functions, calls logistic_fit to learn a model, and produces

graphs of false-negative vs false-positive rate.

- hog36.py - A simple implementation of the HoG descriptor,

specialized for 36x36 images, 6x6 cells, and 2x2 blocks. It currently runs with a fixed number of orientations and no wrapping at 180 degrees, but you will modify it to allow a variable number of orientations and optional wrapping at 180 degrees.

- face_data/training_faces/*.jpg - 6,000 images, 36x36, grayscale,

each containing one centered face

- face_data/training_nonfaces/*.jpg - 250 images, varying sizes, color

- face_data/testing_faces/*.jpg - 500 images, 36x36, grayscale,

each containing one centered face

- face_data/testing_nonfaces/*.jpg - 500 images, 36x36, grayscale

- Copy over logistic_fit.py from part I; you will need it for this part.
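
For orientation, the probability computation that logistic_prob.py is meant to perform has roughly the following shape. Treat this as a sketch only: the function name `predict_probability` and its argument layout are hypothetical, and it assumes that the feature matrix already contains any constant (bias) column and that the model is a 1-D weight vector. The conventions in your Part I code take precedence.

```python
import numpy as np

def predict_probability(X, params):
    """Sigmoid of the linear score for each row of X.

    X is (num_points, num_features); params is (num_features,).
    Both layouts are assumptions made for this illustration.
    """
    z = np.asarray(X, dtype=float) @ np.asarray(params, dtype=float)
    return 1.0 / (1.0 + np.exp(-z))

X = [[1.0, 0.0],
     [1.0, 2.0]]
params = [0.0, 1.0]
p = predict_probability(X, params)
print(p)
```

A score of zero maps to a probability of exactly 0.5, which is why thresholding the probability at 0.5 matches thresholding the linear score at zero.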

Do this and turn in:

- Implement logistic_prob.py,

get_training_data.py and get_testing_data.py. Look for

sections marked "Fill in here". The trickiest part is likely to be

selecting random squares (i.e., random positions and random sizes no

smaller than 36x36) from the nonface images. Turn in these three files.

- Run

from test_face_classifier import test_face_classifier

test_face_classifier(250, 100, 4, True)

in the Python console. This trains the classifier on 250 faces and 250 nonfaces and tests it on

100 faces and 100 nonfaces, using 4 orientations that are wrapped

at 180 degrees. If all goes well, training should complete in a few

seconds, and you should see two plots (again, shown one at a time), one for training and one for testing

performance. The training plot should be boring: running down the bottom

and left sides of the graph, indicating perfect performance. The testing

plot, though, should indicate imperfect performance. Turn in the training and testing plots.

- Train the classifier on 6,000 faces and 6,000 nonfaces, and test it on 500 faces and 500 nonfaces.

Training will take longer,

but should still finish in a few minutes, depending on your CPU.

Note that with the same test set, the testing accuracy should increase significantly with more training data.

Turn in the training and testing plots.

- Train the classifier while increasing the number of orientations from 4 to 6, 9, and 12. Use 12,000 training images (6,000 faces and 6,000 nonfaces) and 1,000 test images (500 faces and 500 nonfaces) as before. Briefly describe what happens to test accuracy.

- Modify hog36.py to disable wrapping of orientations at 180 degrees. Turn in hog36.py. Train the classifier with 12,000 training images (6,000 faces and 6,000 nonfaces), 1,000 test images (500 faces and 500 nonfaces),

9 orientations, and no wrapping. Do you see the same behavior as Dalal and Triggs, in that turning off

the wrapping of orientations at 180 degrees makes little difference to

accuracy? Briefly explain why (or why not) that is the case.

- In parts III and IV of this assignment, you will run this detector at

many locations throughout an image that may or may not contain some faces.

Would you prefer to run the detector with a threshold that favors fewer

false positives, fewer false negatives, or some balance? Briefly explain why.

Acknowledgment: idea and datasets courtesy James Hays.

Last update: 24-Oct-2019 16:41:21
