© xkcd.com

The combination of steadily increasing computing power and memory with a huge amount of data has made it possible to attack many long-standing problems of getting computers to do tasks that normally would require a human. Artificial intelligence, machine learning, and natural language processing (AI, ML, NLP) have been very successful for games (computer chess and Go programs are better than the best humans), speech recognition (think Alexa and Siri), machine translation, and self-driving cars.

There are zillions of books, articles, blogs and tutorials on machine learning, and it's hard to keep up. This overview, Machine Learning for Everyone, is an easy informal introduction with no mathematics, just good illustrations. YMMV, of course, but take a look.

This lab is an open-ended exploration of a few basic topics in NLP and other kinds of ML. The hope is to give you at least some superficial experience, and as you experiment, you should also start to see how well these systems work, or don't. Your job along the way is to answer the questions that we pose, based on your experiments. Include images that you have captured from your screen as appropriate. Submit the result as a web page, using the mechanisms that you learned in the first two or three labs. No need for fancy displays or esthetics; just include text and images, suitably labeled. Use the template in the next section so we can easily see what you've done.

This is a newish lab so it still has rough edges. Don't worry about details, but if you encounter something that seems seriously wrong, please let us know. Otherwise, have fun and see what you learn.

HTML template for your submission

Part 1: Word Trends and N-grams

Part 2: Sentiment Analysis

Part 3: Machine Translation

Part 4: Image Generation

Part 5: Machine Learning

Submitting your work

| In this lab, we will highlight instructions for what you have to submit in a yellow box like this one. |

For grading, we need some uniformity among submissions, so use this template to collect your results as you work through the lab:

The Google Books project has scanned millions of books from libraries all over the world. As the books were scanned, Google used optical character recognition on the scanned material to convert it into plain text that can be readily searched and used for language studies.

Google itself provides a web-based tool, the Google Books Ngram Viewer, that shows how often words and phrases have been used in a variety of corpora. (An n-gram is just a phrase of n words that occur in sequence.)
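To make the idea of an n-gram concrete, here is a minimal Python sketch that splits a sentence into words and counts its 2-grams. It is only an illustration, not how Google's viewer is implemented; the function name `ngrams` and the sample sentence are made up for this example.

```python
from collections import Counter

def ngrams(text, n):
    """Return the list of n-grams (as tuples of words) in text."""
    words = text.lower().split()
    # Slide a window of n words across the text, one word at a time.
    return [tuple(words[i:i + n]) for i in range(len(words) - n + 1)]

# Hypothetical sample text, chosen because one 2-gram repeats.
sample = "to be or not to be that is the question"
bigram_counts = Counter(ngrams(sample, 2))

# Show each 2-gram with the number of times it occurs.
for gram, count in bigram_counts.items():
    print(" ".join(gram), count)
```

In this sample the 2-gram "to be" occurs twice and every other 2-gram occurs once. A real corpus tool would repeat this kind of count over millions of books.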

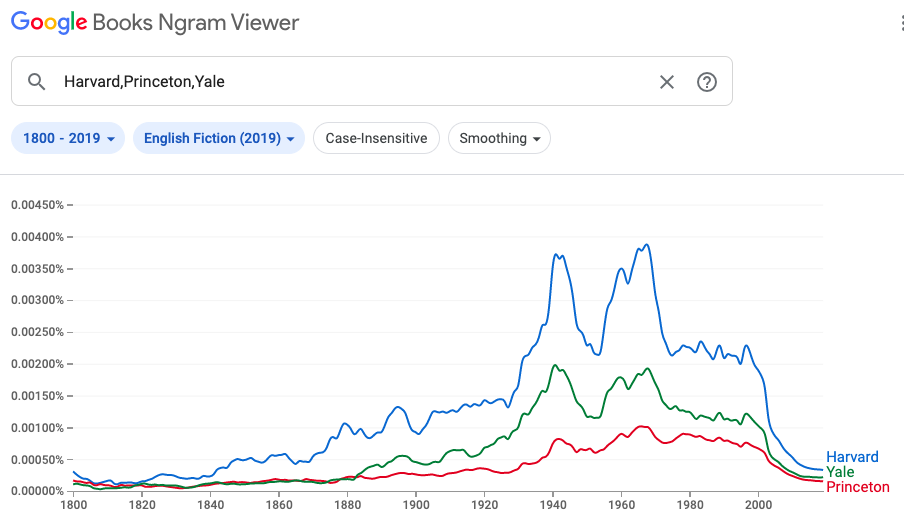

Word usage over time is often revealing and interesting. For example, the graph of "Harvard, Princeton, Yale" shows that "Harvard" occurred much more often than "Princeton" or "Yale", but Princeton mentions have grown steadily, while Harvard seems to have plateaued and Yale is sinking. (Do not read anything into these observations!) But the same search over "English Fiction" shows quite a different story:

What's going on here? All three names had periods of use but seem to have died away, at least comparatively. Food for thought, perhaps?

In this section of the lab, your task is to play with the n-gram viewer and provide a handful of results that you found interesting or worth further exploration. What you look at is up to you, though general areas might include names of places or people, major events, language evolution, correlation of words and phrases with major world events or social trends. You must provide at least two graphs that use some of the advanced features described on the how it works page.

HTML template for your submission

<html>

<title> Your netid, your name </title>

<body>

<h3> Your netid, your name </h3>

Any comments that you would like to make about the lab,

including troubles you had, things that were interesting,

and ways we could make it better.

<h3>Part 1</h3>

<h3>Part 2</h3>

<h3>Part 3</h3>

<h3>Part 4</h3>

<h3>Part 5</h3>

<h3>Part 6</h3>

</body>

</html>

If you include big images, please limit their widths to about 80% of the page width, as in earlier labs.

Put a copy of this template in a file called lab7.html and as you work through the lab, fill in each part with what we ask for, using HTML tags like the ones that you learned in the first few labs.

Part 1: Word Trends and N-grams

Include at least two graphs that use some advanced feature of the Ngram viewer, with a paragraph or two that explains what you did, what advanced feature was used, and what your graphs show.

Part 2: Sentiment Analysis

"Sentiment analysis" refers to the process of trying to determine whether a piece of text is fundamentally positive or negative; this has many applications in trying to understand customer feedback and reviews, survey responses, news stories, and the like.

Sentimood is a very simple-minded sentiment analyzer that basically just counts words with generally positive or negative connotations and computes some averages. You can see the list of words and their sentiment value by "View Source" or clicking the sentimood.js file in your browser.

If you paste some text into the window, it will give you a score that indicates whether the text is positive or negative in tone, along with the words that led it to its conclusion. There's no limit to how much text you can give it, but a few hundred words is plenty.

One problem with Sentimood is that it doesn't understand English at all; it's just counting words. Could we do better by parsing sentences, perhaps to detect things like negation ("He is not an idiot") or qualification by a clause ("A bit slow but certainly not an idiot") or irony ("My, that is a baby, isn't it?")?
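The word-counting approach, and why negation defeats it, can be sketched in a few lines of Python. This is a rough illustration under stated assumptions, not Sentimood's actual code: the tiny word list and its weights are invented, and averaging over all the words in the text is just one plausible choice.

```python
import re

# Hypothetical mini-lexicon for illustration only; these words and
# weights are made up and are NOT Sentimood's actual list.
LEXICON = {"good": 3, "great": 3, "fun": 4, "slow": -2, "idiot": -3, "bad": -3}

def score(text):
    """Sum the weights of known words; return (total, average, hits)."""
    words = re.findall(r"[a-z']+", text.lower())
    hits = [(w, LEXICON[w]) for w in words if w in LEXICON]
    total = sum(weight for _, weight in hits)
    # Average over every word in the text (an assumption of this sketch).
    average = total / len(words) if words else 0.0
    return total, average, hits

for sentence in ["He is not an idiot",
                 "A bit slow but certainly not an idiot"]:
    print(sentence, "->", score(sentence))
```

Both sentences come out negative, because the counter sees "idiot" and "slow" but has no idea what "not" does to them.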

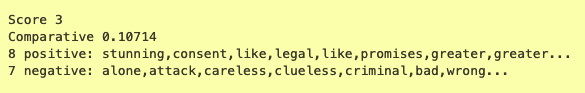

More sophisticated sentiment analyzers sometimes do a better job of parsing English, and thus are better at assessing sentiment, though they are easily fooled. For example, this demo of a commercial service might best be described as "mixed." The input

The course will have fundamentally the same structure as in previous years, but lectures, case studies and examples change every year according to what's happening. Stunning amounts of our private lives are observed and recorded by social networks, businesses and governments, mostly without our knowledge, let alone consent. Companies like Amazon, Apple, Facebook, Google and Microsoft are duking it out with each other on technical and legal fronts, and with governments everywhere. Shadowy groups and acronymic agencies routinely attack us and each other; their potential effect on things like elections and critical infrastructure is way beyond worrisome. The Internet of Things promises greater convenience at the price of much greater cyber perils. The careless, the clueless, the courts, the congress, the crazies, and the criminal (not disjoint groups, in case you hadn't noticed) continue to do bad things with technology. What could possibly go wrong? Come and find out.

is characterized this way:

It doesn't look very accurate, and it would not encourage me to pay money for the service, but it's a stab at something useful.

Arguably, Sentimood gives more useful information on that specific text:

| Find two words in Sentimood's list that could be either positive or negative, depending on context and interpretation. Find two words where you think the weighting is seriously wrong. Try some sentences from literature, your own writing, tweets, or whatever, with both Sentimood and the commercial analyzer. Give two examples of sentences where they agree and both appear to be correct. (If you wish, find another sentiment analyzer and use it instead.) Give two examples where they differ markedly in their assessment. Give two examples where they agree and both appear to be clearly wrong. |

The classic challenge is translating the English expression "the spirit is willing but the flesh is weak" into Russian, then back to English. At least in legend, this came out as "the vodka is strong but the meat is rotten." Today, the Russian is "дух хочет, но плоть слаба", and the English is "the spirit desires, but the flesh is weak", which isn't great but at least gets the idea across. (Bing Translate produces similar results, "Дух желает, но плоть слаба".)

In this section, you have to experiment with Google Translate and one of its competitors like Bing or DeepL to get a sense of what works well today and what is not quite ready to replace people.

Try 2 inputs from different sources, like the first few lines of novels, or cliches, or news stories, with two different translation systems. Run them through another language that you know and then back to English. Include at least two examples that work well and two that are spectacularly wrong.

How well do your translation services do on your chosen language? Would it be useful in practice? Do you see meaningful differences between different services?
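If you want a rough number to go with your own judgment, one option (purely a suggestion, not something the lab requires) is to measure how many words survive a round trip. The sketch below uses Python's standard `difflib` module; the function name `round_trip_similarity` is made up, and word overlap is a crude stand-in for meaning, so treat the score with suspicion.

```python
from difflib import SequenceMatcher

def round_trip_similarity(original, back_translated):
    """Return a 0..1 word-level similarity between two sentences."""
    a = original.lower().replace(",", "").split()
    b = back_translated.lower().replace(",", "").split()
    # ratio() is 1.0 for identical word sequences and falls toward 0
    # as fewer words line up in the same order.
    return SequenceMatcher(None, a, b).ratio()

original = "the spirit is willing but the flesh is weak"
modern = "the spirit desires, but the flesh is weak"
legend = "the vodka is strong but the meat is rotten"

print("modern:", round_trip_similarity(original, modern))
print("legend:", round_trip_similarity(original, legend))
```

The modern round trip scores noticeably higher than the legendary one, which matches intuition here, but a paraphrase that keeps the meaning and changes every word would score badly too.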

If your HTML page doesn't display non-ASCII characters properly, you may have to tell the browser to use UTF-8. This incantation at the beginning of your lab7.html should help:

Machine learning models have been steadily improving in their ability to generate language and images that can often appear as if they were generated by people.

One of the most interesting examples marries language models to image tags to generate surprisingly creative images from a "prompt", perhaps a dozen words that approximately describe an image that might exist in the world, or might be entirely synthetic. We saw a stunning example early in the semester, the AI-generated Théâtre D’opéra Spatial:

Most such images are nowhere near that good, but they do show considerable promise. For example, this pair of images comes from the prompt "A bearded elderly male professor teaching programming to a class of bored humanities majors":

More seriously, some prompts produce remarkably good results; for instance, "a gray cat sitting at a door watching squirrels in the style of van gogh's starry night":

The purpose of this section is for you to explore this area a little, generate a handful of images that you like, and have some fun while seeing what works and what doesn't yet.

The experiments are based on DALL-E2, a machine-learning model that creates images from short text descriptions in ordinary language; it's based on a modification of the text generation system GPT-3, a deep-learning system that uses a natural-language prompt to generate a sequence of text that is in some sense a natural extension of the prompt.

Both of these systems are fun to play with, and impressive in their capabilities. Archie suggests some tips that might help image quality:

For each set, include a screenshot like the ones above, and make sure you include the prompt. For each, describe how you evolved the prompts before you settled on your final version.

After training, the algorithm classifies new items, or predicts their values, based on what it learned from the training set.

There is an enormous range of algorithms, and much research continues to improve them. There are also many ways in which machine learning algorithms can fail -- for example, "over-fitting", in which the algorithm does very well on its training data but much less well on new data -- or producing results that confirm biases in the training data; this is an especially sensitive issue in applications like sentencing or predicting recidivism in the criminal justice system.
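Over-fitting is easier to see with a toy example. The Python sketch below compares a "memorizer" that copies the label of the closest training example against a simple model with one learned parameter. Everything here is invented for illustration: the data, the mislabeled training point, and the function names.

```python
# Made-up data: the "true" rule is label 1 when x >= 5, but one
# training example, (4, 1), is mislabeled noise.
TRAIN = [(1, 0), (2, 0), (3, 0), (4, 1), (6, 1), (7, 1), (8, 1), (9, 1)]
TEST = [(2.5, 0), (3.6, 0), (4.2, 0), (4.3, 0), (5.5, 1), (7.5, 1)]

def nearest_neighbor(x):
    """Memorizer: copy the label of the closest training example."""
    return min(TRAIN, key=lambda example: abs(example[0] - x))[1]

def mean(values):
    return sum(values) / len(values)

# Simple model: one learned parameter, a threshold halfway between
# the average x of each class in the training data.
THRESHOLD = (mean([x for x, y in TRAIN if y == 0]) +
             mean([x for x, y in TRAIN if y == 1])) / 2

def threshold_rule(x):
    return 1 if x > THRESHOLD else 0

def accuracy(model, data):
    """Fraction of examples in data that the model labels correctly."""
    return sum(model(x) == y for x, y in data) / len(data)

for name, model in [("memorizer", nearest_neighbor),
                    ("threshold", threshold_rule)]:
    print(name, "train:", accuracy(model, TRAIN),
          "test:", accuracy(model, TEST))
```

The memorizer is perfect on its training data, noise included, but gets only half of the new examples right; the simpler rule misses the one noisy training point and does better on the new data.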

One particularly effective kind of ML is called "deep learning"; it uses many layers of artificial neurons, and its implementation loosely matches the kind of processing that the human brain appears to do. A set of neurons observes low-level features; their outputs are combined into another set of neurons that observe higher-level features based on the lower level, and so on. Deep learning has been very effective in image recognition, and that's the basis of this part of the lab.
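The layering idea can be shown with a network small enough to check by hand. In this Python sketch, two first-layer neurons each detect a simple feature of two on/off inputs, and a second-layer neuron combines them into a higher-level feature. The weights are set by hand rather than learned, and all the names are made up; a real deep-learning system has vastly more neurons and learns its weights from data.

```python
def step(value):
    """Fire (1) if the weighted input is positive, else stay quiet (0)."""
    return 1 if value > 0 else 0

def neuron(inputs, weights, bias):
    """One artificial neuron: weighted sum of inputs, then a threshold."""
    return step(sum(i * w for i, w in zip(inputs, weights)) + bias)

def tiny_network(a, b):
    # Layer 1: two neurons, each detecting a simple feature of the inputs.
    either = neuron([a, b], [1, 1], -0.5)   # fires if a OR b is on
    both = neuron([a, b], [1, 1], -1.5)     # fires if a AND b are on
    # Layer 2: combines the layer-1 outputs into a higher-level feature,
    # "exactly one input is on", that neither layer-1 neuron detects alone.
    return neuron([either, both], [1, -1], -0.5)

for a in (0, 1):
    for b in (0, 1):
        print(a, b, "->", tiny_network(a, b))
```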

Google provides Teachable Machine, an interface that uses the camera on a computer to train a neural network on multiple visual or auditory inputs; the interface looks like this:

I trained the network on two images, holding a pen in one of two orientations. It's an easy case, and the recognizer is quite good at distinguishing them.

For each experiment, describe what you tried, why you chose it, and how well it worked. How many training examples were necessary? How did it improve, if at all, with more training? Include a screenshot like the one above.

Make sure your lab7.html, including all images, is accessible at https://your_netid.mycpanel.princeton.edu/lab7.html. Ask a friend to view your page and check all the links from his or her computer.

When you are sure that your page is displaying correctly, upload your lab7.html and other files to https://tigerfile.cs.princeton.edu/COS109_F2022/Lab7.

Part 3: Machine Translation

Computer translation of one human language into another is a very old problem. Back in the 1950s, people confidently predicted that it would be a solved problem in the 1960s. We're still not there yet, though the situation is enormously better than it was, thanks to lots of computing power and very large collections of text that can be used to train machine-learning algorithms.

<!doctype HTML>

<HTML>

<meta http-equiv="content-type" content="text/html; charset=utf-8" />

Part 4: Image Generation

Using DALL-E2 at openai.com, create two distinctly different sets of images. (You will have to register to use the service.)

One set should be pretty representational, like the ones above. The other set should be non-representational, something that is not likely to exist in the real world ("a tyrannosaurus sword-fighting with Spiderman on mars"). This isn't meant to be prescriptive, however, so create something that you really like -- be imaginative!

Part 5: Machine Learning

"Machine learning algorithms can figure out how to perform important tasks by generalizing from examples."

Most machine-learning algorithms have a similar structure. They "learn" by processing a large number of examples that are labeled with the correct answer, for example, whether some text is spam or not, or which digit a hand-written sample is, or what kind of animal is found in a picture, or what the price of a house is. The algorithm figures out parameter values that enable it to make the best classifications or predictions based on this training set.
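Here is what "figuring out parameter values" can look like in the simplest case, using the house-price example. This Python sketch fits a straight line (two parameters, a slope and an intercept) to a handful of labeled examples by ordinary least squares, then predicts the price of a house it has not seen. The data is made up and deliberately tidy, and the names `train` and `predict` are just for illustration; real systems use far more examples and far more parameters.

```python
# Made-up labeled examples: (house size in square feet, sale price).
EXAMPLES = [(1000, 150_000), (1500, 200_000), (2000, 250_000), (2500, 300_000)]

def train(examples):
    """Least-squares fit of price = slope * size + intercept."""
    n = len(examples)
    mean_x = sum(x for x, _ in examples) / n
    mean_y = sum(y for _, y in examples) / n
    slope = (sum((x - mean_x) * (y - mean_y) for x, y in examples) /
             sum((x - mean_x) ** 2 for x, _ in examples))
    intercept = mean_y - slope * mean_x
    return slope, intercept

def predict(params, size):
    """Apply the learned parameters to a new, unlabeled example."""
    slope, intercept = params
    return slope * size + intercept

params = train(EXAMPLES)
print("learned parameters:", params)
print("predicted price for 1800 sq ft:", predict(params, 1800))
```

With this toy data the fit comes out to a slope of 100 dollars per square foot and an intercept of 50,000 dollars, so the 1800-square-foot house is predicted at 230,000 dollars.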

Using the deep learning link above, do two distinctly different projects using the camera and/or microphone in your computer. This might be images of yourself in various attire, or of you and friends, or singing, or lots of other things -- be imaginative!

Submitting Your Work