Programming Assignment 1

Yiming Liu (yimingl@princeton.edu)

Oct 9

Approximate Nearest Neighbor Search Acceleration

I have implemented the ANN acceleration in my program. The ANN library is very convenient to use. Its interface is self-explanatory.

However, in fact, without ANN library, we still have lots of intuitive techniques to accelerate the nearest neighbor search. The most important ones are:

- Collect the neighborhoods on A and A' before synthesis. If we collect them on the fly, we need to collect all of them for each pixel on B'. If we collect the neighborhoods before synthesis, this collection is only needed once. This pre-collection is required by the ANN library as well.

- Decompose the euclidean distance computation ||u-v||2 = u2 + v2 - dot(u, v). Suppose we need to compute the distance of a vector u in B and B' to a set of of vector v's in A and A'. u2 can be discarded because all distances have this component. All v2 can be precomputed and reused. So for each distance computation we need d multiplication and 1 subtraction operation, where d is the dimensionality of vectors. Without this decomposition, we need d subtraction plus d multiplication operations. Note that this decomposition will lose some precision for floating-point number computation. In my experiment, it can leads to difference of results between ANN and this method. However, the quality of result is not degraded.

- Use SSE or SSE2 to accelerate vector computation. Element-wise vector operation and dot product operation can be accelerated using SSE and SSE2 instructions. We can pack 4 float variables or 2 double variables into 128-bit registers dedicated to SSE, and perform operations for them simultaneously with only a single CPU thread.

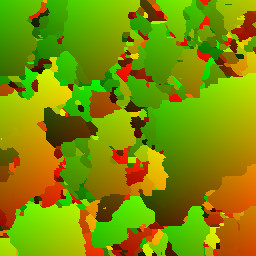

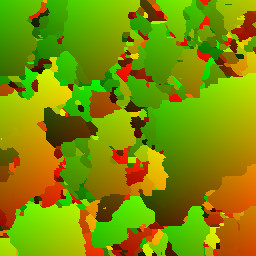

The following images compare the difference between the algorithm without ANN and with ANN. Thanks to the acceleration techniques above, the program without ANN is not much slower than the one with ANN (145.7 seconds vs. 111.8 seconds). Note that because the distance decomposition loses some precision, and some randomness is involved on the coarsest level (I followed Efros' randomness setting on the coarsest level), the two results look quite different. However, their quality is similar.

Common parameters:

- Window size on the finer level: 9x9.

- Window size on the coarser level: 5x5.

- Kappa: 2.0.

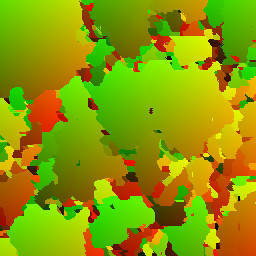

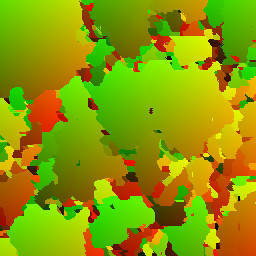

Exemplar:

Result without ANN (145.7 seconds)

Result with ANN (111.8 seconds)