|

COS 323 - Computing for the Physical and Social Sciences |

Fall 2012 |

| Course home | Outline and lecture notes | Assignments |

In this assignment, you will implement nonlinear least squares fitting using Gauss-Newton iteration. You will be fitting to the logistic model, which (under certain assumptions) predicts the probability of occurrence of some event as a function of the values of some explanatory variables.

The logistic model is a generalized linear model, meaning

that the function consists of a single nonlinearity applied to a

linear combination of the variables: $$ p(x) = g(a + b x_1 + c x_2

+ \cdots) $$

The logistic model is a generalized linear model, meaning

that the function consists of a single nonlinearity applied to a

linear combination of the variables: $$ p(x) = g(a + b x_1 + c x_2

+ \cdots) $$

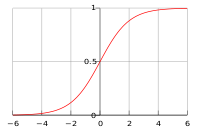

where the nonlinearity is the logistic function, shown at right $$ g(z) = \frac{1}{1 + e^{-z}}. $$

In this case, the explanatory variables are $x_1, x_2, \ldots$, and any given setting of them gives rise to a probability. Fitting the logistic model to data consists of estimating the coefficients $a, b, c, \ldots$ using least squares: $$ \arg\min \; \sum_{i=1}^{n} \bigl(y_i - g(a + b x_{i1} + c x_{i2} + \cdots)\bigr)^2 . $$ For the double-subscripted variables $x_{ij}$, the first subscript refers to the data point and the second refers to which explanatory variable it is. Since for each data point the event actually did or did not occur, the $y_i$ will each be 0 or 1. Note that the above includes a constant term, which was not included in the lecture notes.

You will be using Gauss-Newton iteration to perform the fit - see the motivation behind this in slides 8-18 of Lecture 8. Briefly, you will compute successively better estimates of the parameters via the iteration $$ \begin{pmatrix} a \\ b \\ c \\ \vdots \end{pmatrix}^{\!\!(k+1)} = \begin{pmatrix} a \\ b \\ c \\ \vdots \end{pmatrix}^{\!\!(k)} + \delta^{(k)}, \quad \mathrm{where} \quad J^\mathrm{T} J \, \delta = J^\mathrm{T} r. $$

The matrix $J$ is a Jacobian: the columns correspond to partial derivatives of $p(x)$ with respect to $a, b, c, \ldots$, and each row corresponds to a different data point. The vector $r$ is the residual: each row is $y_i - g(a + b x_{i1} + c x_{i2} + \cdots)$ for a different data point. (The parenthesized superscripts $(k)$ and $(k+1)$ are the values at different iterations.)

To do: Derive the expression for each row in the Jacobian. Remember to use the chain rule for differentiation. Hint: the derivative of the logistic function $g(z)$ may be written as just $g(z)\,(1-g(z))$, which should simplify your expressions (and hence your code).

Implement a Matlab function, or collection of functions, that performs the following:

Hint #1: Use the [U,W,V] = svd(A,0); syntax.

Hint #2: You should never need to compute $J^\mathrm{T} J$ explicitly.

Test your implementation on the file gradschool.csv, which you can load with the command

load -ascii gradschool.csvThe file contains (fake!) data on graduate school admissions:

Try running the regression on several different subsets of the data. What can you say about the stability with which each parameter is recovered?

This assignment is due Tuesday, October 23, 2012 at 11:59 PM. Please see the course policies regarding assignment submission, late assignments, and collaboration.

Please submit:

The Dropbox link to submit your assignment is here.